Table of Contents

- 15.1. Replication Configuration

- 15.2. Replication Solutions

- 15.2.1. Using Replication for Backups

- 15.2.2. Using Replication with Different Master and Slave Storage Engines

- 15.2.3. Using Replication for Scale-Out

- 15.2.4. Replicating Different Databases to Different Slaves

- 15.2.5. Improving Replication Performance

- 15.2.6. Switching Masters During Failover

- 15.2.7. Setting Up Replication Using SSL

- 15.3. Replication Notes and Tips

- 15.4. Replication Implementation Overview

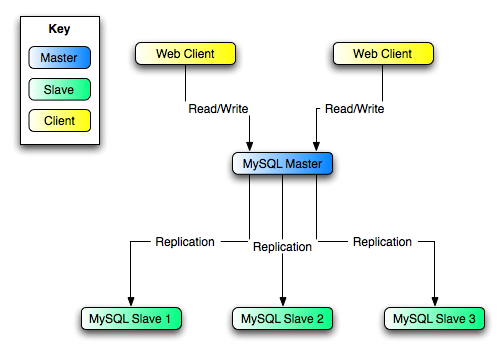

Replication enables data from one MySQL database server (called the master) to be replicated to one or more MySQL database servers (slaves). Replication is asynchronous - your replication slaves do not need to be connected permanently to receive updates from the master, which means that updates can occur over long-distance connections and even temporary solutions such as a dial-up service. Depending on the configuration, you can replicate all databases, selected databases and even selected tables within a database.

The target uses for replication in MySQL include:

Scale-out solutions - spreading the load among multiple slaves to improve performance. In this environment, all writes and updates must take place on the master server. Reads, however, may take place on one or more slaves. This model can improve the performance of writes (since the master is dedicated to updates), while dramatically increasing read speed across an increasing number of slaves.

Data security - because data is replicated to the slave, and the slave can pause the replication process, it is possible to run backup services on the slave without corrupting the corresponding master data.

Analytics - live data can be created on the master, while the analysis of the information can take place on the slave without affecting the performance of the master.

Long-distance data distribution - if a branch office would like to work with a copy of your main data, you can use replication to create a local copy of the data for their use without requiring permanent access to the master.

Replication in MySQL features support for one-way, asynchronous replication, in which one server acts as the master, while one or more other servers act as slaves. This is in contrast to the synchronous replication which is a characteristic of MySQL Cluster (see Chapter 16, MySQL Cluster).

There are a number of solutions available for setting up replication between two servers, but the best method to use depends on the presence of data and the engine types you are using. For more information on the available options, see Section 15.1.1, “How to Set Up Replication”.

Replication is controlled through a number of different options and variables. These control the core operation of the replication, timeouts and the databases and filters that can be applied on databases and tables. For more information on the available options, see Section 15.1.2, “Replication Startup Options and Variables”.

You can use replication to solve a number of different problems, including problems with performance, supporting the backup of different databases and for use as part of a larger solution to alleviate system failures. For information on how to address these issues, see Section 15.2, “Replication Solutions”.

For notes and tips on how different data types and statements are treated during replication, including details of replication features, version compatibility, upgrades, and problems and their resolution, including an FAQ, see Section 15.3, “Replication Notes and Tips”.

For detailed information on the implementation of replication, how replication works, the process and contents of the binary log, background threads, and the rules used to decide how statements are recorded and replicated, see Section 15.4, “Replication Implementation Overview”.

MySQL Enterprise: The MySQL Enterprise Monitor provides numerous advisors that give immediate feedback about replication-related problems. For more information, see http://www.mysql.com/products/enterprise/advisors.html.

Replication between servers in MySQL works through the use of the binary logging mechanism. The MySQL instance operating as the master (the source of the database changes) writes updates and changes to the database to the binary log. The information in the binary log is stored in different logging formats according to the database changes being recorded. Slaves are configured to read the binary log from the master and to execute the events in the binary log on the slave's local database.

The master plays a passive role in this scenario. Once binary logging has been enabled, all statements are recorded in the binary log. Each slave receives a copy of the entire contents of the binary log. It is the responsibility of the slave to decide which statements in the binary log should be executed; you cannot configure the master to log only certain events. If you do not specify otherwise, all events in the master binary log are executed on the slave. If required, you can configure the slave to process only events that apply to particular databases or tables.

Slaves keep a record of the binary log file and position within the log file that they have read and processed from the master. This means that multiple slaves can be connected to the master and executing different parts of the same binary log. Because the slaves control this process, individual slaves can be connected and disconnected from the server without affecting the master's operation. Also, because each slave remembers the position within the binary log, it is possible for slaves to be disconnected, reconnect and then 'catch up' by continuing from the recorded position.
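The position-tracking behaviour described above can be illustrated with a toy model. This is only a sketch: the `Master` and `Slave` classes are hypothetical, the binary log is reduced to a Python list, and the position is a list index rather than a real log file name and byte offset.

```python
from dataclasses import dataclass, field


@dataclass
class Master:
    """Toy master: the binary log is just an append-only list of events."""
    binlog: list = field(default_factory=list)

    def execute(self, statement: str) -> None:
        # Every change is recorded; the master does no per-slave bookkeeping.
        self.binlog.append(statement)


@dataclass
class Slave:
    """Toy slave: remembers how far into the master's log it has read."""
    position: int = 0
    applied: list = field(default_factory=list)

    def catch_up(self, master: Master) -> int:
        # Resume from the recorded position and apply everything after it.
        new_events = master.binlog[self.position:]
        self.applied.extend(new_events)
        self.position = len(master.binlog)
        return len(new_events)


master = Master()
slave_a, slave_b = Slave(), Slave()

master.execute("INSERT INTO t VALUES (1)")
slave_a.catch_up(master)              # slave_a is connected; slave_b is offline
master.execute("INSERT INTO t VALUES (2)")
slave_a.catch_up(master)
caught_up = slave_b.catch_up(master)  # slave_b reconnects and replays both events
```

Because each slave owns its position, the toy master never needs to know which slaves exist or how far behind they are.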

Both the master and each slave must be configured with a unique ID (using the server-id option). In addition, the slave must be configured with information about the master host name, log file name and position within that file. These details can be controlled from within a MySQL session using the CHANGE MASTER statement. The details are stored within the master.info file.

In this section the setup and configuration required for a replication environment is described, including step-by-step instructions for creating a new replication environment. The major components of this section are:

For a guide to setting up two or more servers for replication see Section 15.1.1, “How to Set Up Replication”. This section deals with the setup of the systems and provides methods for copying data between the master and slaves.

Detailed information on the different configuration options and variables that apply to replication is provided in Section 15.1.2, “Replication Startup Options and Variables”.

Once started, the replication process should require little administration or monitoring. However, for advice on common tasks that you may want to execute, see Section 15.1.3, “Common Replication Administration Tasks”.

- 15.1.1.1. Creating a User for Replication

- 15.1.1.2. Setting the Replication Master Configuration

- 15.1.1.3. Setting the Replication Slave Configuration

- 15.1.1.4. Obtaining the Master Replication Information

- 15.1.1.5. Creating a Data Snapshot Using mysqldump

- 15.1.1.6. Creating a Data Snapshot Using Raw Data Files

- 15.1.1.7. Setting Up Replication with New Master and Slaves

- 15.1.1.8. Setting Up Replication with Existing Data

- 15.1.1.9. Introducing Additional Slaves to an Existing Replication Environment

- 15.1.1.10. Setting the Master Configuration on the Slave

This section describes how to set up complete replication of a MySQL server. There are a number of different methods for setting up replication, and the exact method that you use will depend on how you are setting up replication, and whether you already have data within your master database.

There are some generic tasks which may be required for all replication setups:

You may want to create a separate user that will be used by your slaves to authenticate with the master to read the binary log for replication. The step is optional. See Section 15.1.1.1, “Creating a User for Replication”.

You must configure the master to support the binary log and configure a unique ID. See Section 15.1.1.2, “Setting the Replication Master Configuration”.

You must configure a unique ID for each slave that you want to connect to the Master. See Section 15.1.1.3, “Setting the Replication Slave Configuration”.

Before starting a data snapshot or the replication process, you should record the position of the binary log on the master. You will need this information when configuring the slave so that the slave knows where within the binary log to start executing events. See Section 15.1.1.4, “Obtaining the Master Replication Information”.

If you already have data on your Master and you want to synchronize your slave with this base data, then you will need to create a data snapshot of your database. You can create a snapshot using mysqldump (see Section 15.1.1.5, “Creating a Data Snapshot Using mysqldump”) or by copying the data files directly (see Section 15.1.1.6, “Creating a Data Snapshot Using Raw Data Files”).

You will need to configure the slave with the Master settings, such as the hostname, login credentials and binary log name and positions. See Section 15.1.1.10, “Setting the Master Configuration on the Slave”.

Once you have configured the basic options, you will need to follow the instructions for your replication setup. A number of alternatives are provided:

If you are setting up a new MySQL master and one or more slaves, then you need only set up the configuration, as you have no data to exchange. For guidance on setting up replication in this situation, see Section 15.1.1.7, “Setting Up Replication with New Master and Slaves”.

If you are already running a MySQL server, and therefore already have data that will need to be transferred to your slaves before replication starts, have not previously configured the binary log and are able to shut down your MySQL server for a short period during the process, see Section 15.1.1.8, “Setting Up Replication with Existing Data”.

If you are setting up additional slaves to an existing replication environment then you can set up the slaves without affecting the master. See Section 15.1.1.9, “Introducing Additional Slaves to an Existing Replication Environment”.

If you want to administer a MySQL replication setup, we suggest that you read this entire chapter through and try all statements mentioned in Section 12.6.1, “SQL Statements for Controlling Master Servers”, and Section 12.6.2, “SQL Statements for Controlling Slave Servers”. You should also familiarize yourself with the replication startup options described in Section 15.1.2, “Replication Startup Options and Variables”.

Note

Certain steps within the setup process require the SUPER privilege. If you do not have this privilege then enabling replication may not be possible.

Each Slave must connect to the Master using a standard username and password. The user that you use for this operation can be any user, providing they have been granted the REPLICATION SLAVE privilege.

You do not need to create a specific user for replication. However, you should be aware that the username and password will be stored in plain text within the master.info file. Therefore you may want to create a user that only has privileges for the replication process.

To create a user or grant an existing user the privileges required for replication, use the GRANT statement. If you create a user solely for the purposes of replication then that user only needs the REPLICATION SLAVE privilege. For example, to create a user, repl, that allows all hosts within the domain mydomain.com to connect for replication:

mysql> GRANT REPLICATION SLAVE ON *.*
    -> TO 'repl'@'%.mydomain.com' IDENTIFIED BY 'slavepass';

See Section 12.5.1.3, “GRANT Syntax”, for more information on the GRANT statement.

You may wish to create a different user for each slave, or use the same user for each slave that needs to connect. As long as each user that you want to use for the replication process has the REPLICATION SLAVE privilege you can create as many users as you require.

For replication to work you must enable binary logging on the master. If binary logging is not enabled, replication will not be possible as it is the binary log that is used to exchange data between the master and slaves.

Each server within a replication group must have a unique server-id. The server-id is used to identify individual servers within the group, and must be a positive integer between 1 and 2^32 - 1. How you organize and select the numbers is entirely up to you.
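As a quick illustration of the permitted range, the following sketch checks a candidate value before it is written to a configuration file. The function name `is_valid_server_id` is hypothetical and not part of MySQL.

```python
MAX_SERVER_ID = 2**32 - 1  # upper bound stated in the text


def is_valid_server_id(value) -> bool:
    """Return True if value is an integer in the range 1 .. 2^32 - 1."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        return False
    return 1 <= value <= MAX_SERVER_ID
```

For example, `is_valid_server_id(1)` and `is_valid_server_id(4294967295)` are accepted, while `0` and `2**32` are rejected.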

To configure both these options you will need to shut down your MySQL server and edit the configuration of the my.cnf or my.ini file.

You will need to add the following options to the configuration file within the [mysqld] section. If these options already exist, but are commented out, uncomment the options and alter them according to your needs. For example, to enable binary logging, using a log filename prefix of mysql-bin, and setting a server ID of 1:

[mysqld]
log-bin=mysql-bin
server-id=1
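If you manage several servers, you may prefer to generate this fragment rather than type it by hand. The sketch below uses Python's standard `configparser` module; the helper name `render_master_options` is an assumption for illustration, and the output should be reviewed before being merged into a real my.cnf or my.ini file.

```python
import configparser
import io


def render_master_options(log_basename: str, server_id: int) -> str:
    """Render a [mysqld] section enabling binary logging with the given server ID."""
    parser = configparser.ConfigParser()
    parser["mysqld"] = {"log-bin": log_basename, "server-id": str(server_id)}
    buffer = io.StringIO()
    # space_around_delimiters=False writes "key=value" with no surrounding spaces.
    parser.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


print(render_master_options("mysql-bin", 1))
```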

Note

For the greatest possible durability and consistency in a replication setup using InnoDB with transactions, you should use innodb_flush_log_at_trx_commit=1 and sync_binlog=1 in the master my.cnf file.

Note

Ensure that the skip-networking option has not been enabled on your replication master. If networking has been disabled, then your slave will not be able to communicate with the master and replication will fail.

The only option you must configure on the slave is to set the unique server ID. If this option is not already set, or the current value conflicts with the value that you have chosen for the master server, then you should shut down your slave server, and edit the configuration to specify the server id. For example:

[mysqld]
server-id=2

If you are setting up multiple slaves, each one must have a unique server-id value that differs from that of the master and from each of the other slaves. Think of server-id values as something similar to IP addresses: These IDs uniquely identify each server instance in the community of replication partners.
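A planned topology can be checked for clashing IDs before any server is started. The sketch below is illustrative only: the host names and the helper `duplicate_server_ids` are hypothetical.

```python
from collections import Counter


def duplicate_server_ids(server_ids: dict) -> dict:
    """Map each server-id used more than once to the sorted hosts that share it."""
    counts = Counter(server_ids.values())
    return {
        sid: sorted(host for host, value in server_ids.items() if value == sid)
        for sid, n in counts.items()
        if n > 1
    }


# Hypothetical replication group: two slaves were given the same ID by mistake.
group = {"master": 1, "slave-a": 2, "slave-b": 2, "slave-c": 3}
conflicts = duplicate_server_ids(group)
```

An empty result means every server in the group has a distinct server-id.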

If you do not specify a server-id value, it is set to 1 if you have not defined master-host; otherwise it is set to 2. Note that in the case of server-id omission, a master refuses connections from all slaves, and a slave refuses to connect to a master. Thus, omitting server-id is good only for backup with a binary log.

You do not have to enable binary logging on the slave for replication to be enabled. However, if you enable binary logging on the slave then you can use the binary log for data backups and crash recovery on the slave, and also use the slave as part of a more complex replication topology.

To configure replication on the slave you must determine the master's current point within the master binary log. You will need this information so that when the slave starts the replication process, it is able to start processing events from the binary log at the correct point.

If you have existing data on your master that you want to synchronize on your slaves before starting the replication process, then you must stop processing statements on the master, obtain the current position, and then dump the data, before allowing the master to continue executing statements. If you do not stop the execution of statements, then the data dump and the master status information that you use will not match, and you will end up with inconsistent or corrupted databases on the slaves.

To get the master status information, follow these steps:

Start the command line client and flush all tables and block write statements by executing the FLUSH TABLES WITH READ LOCK statement:

mysql> FLUSH TABLES WITH READ LOCK;

For InnoDB tables, note that FLUSH TABLES WITH READ LOCK also blocks COMMIT operations.

Warning

Leave the client from which you issued the FLUSH TABLES statement running so that the read lock remains in effect. If you exit the client, the lock is released.

Use the SHOW MASTER STATUS statement to determine the current binary log name and offset on the master:

mysql> SHOW MASTER STATUS;
+---------------+----------+--------------+------------------+
| File          | Position | Binlog_Do_DB | Binlog_Ignore_DB |
+---------------+----------+--------------+------------------+
| mysql-bin.003 | 73       | test         | manual,mysql     |
+---------------+----------+--------------+------------------+

The File column shows the name of the log and Position shows the offset within the file. In this example, the binary log file is mysql-bin.003 and the offset is 73. Record these values. You need them later when you are setting up the slave. They represent the replication coordinates at which the slave should begin processing new updates from the master.

If the master has been running previously without binary logging enabled, the log name and position values displayed by SHOW MASTER STATUS or mysqldump --master-data will be empty. In that case, the values that you need to use later when specifying the slave's log file and position are the empty string ('') and 4.

You now have the information you need to enable the slave to start reading from the binary log in the correct place to start replication.
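If you capture the client output in a script, the coordinates can be extracted mechanically instead of being copied by hand. The following sketch assumes the tabular layout printed by the command line client, as in the example above; the function name `parse_master_status` is hypothetical.

```python
def parse_master_status(output: str) -> tuple:
    """Extract (File, Position) from the tabular output of SHOW MASTER STATUS."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in output.splitlines()
        if line.strip().startswith("|")  # skip the +-----+ border lines
    ]
    if len(rows) < 2:
        raise ValueError("expected a header row and one data row")
    record = dict(zip(rows[0], rows[1]))
    return record["File"], int(record["Position"])


sample = """
+---------------+----------+--------------+------------------+
| File          | Position | Binlog_Do_DB | Binlog_Ignore_DB |
+---------------+----------+--------------+------------------+
| mysql-bin.003 | 73       | test         | manual,mysql     |
+---------------+----------+--------------+------------------+
"""
coordinates = parse_master_status(sample)
```

Here `coordinates` holds the log file name and the numeric offset that the slave needs later.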

If you have existing data that needs to be synchronized with the slave before you start replication, leave the client running so that the lock remains in place and then proceed to Section 15.1.1.5, “Creating a Data Snapshot Using mysqldump”, or Section 15.1.1.6, “Creating a Data Snapshot Using Raw Data Files”.

If you are setting up a brand new master and slave replication group, then you can exit the client and release the locks.

One way to create a snapshot of the data in an existing master database is to use the mysqldump tool. Once the data dump has been completed, you then import this data into the slave before starting the replication process.

To obtain a snapshot of the data using mysqldump:

If you haven't already locked the tables on the server to prevent queries that update data from executing:

Start the command line client and flush all tables and block write statements by executing the FLUSH TABLES WITH READ LOCK statement:

mysql> FLUSH TABLES WITH READ LOCK;

Remember to use SHOW MASTER STATUS and record the binary log details for use when starting up the slave. The point in time of your snapshot and the binary log position must match. See Section 15.1.1.4, “Obtaining the Master Replication Information”.

In another session, use mysqldump to create a dump either of all the databases you want to replicate, or by selecting specific databases individually. For example:

shell> mysqldump --all-databases --lock-all-tables >dbdump.db

An alternative to using a bare dump is to use the --master-data option, which will automatically append the CHANGE MASTER statement required on the slave to start the replication process.

shell> mysqldump --all-databases --master-data >dbdump.db

When choosing databases to include in the dump, remember that you will need to filter out databases on each slave that you do not want to include in the replication process.

You will need either to copy the dump file to the slave, or to use the file from the master when connecting remotely to the slave to import the data.
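The two mysqldump invocations shown above differ only in a few flags, so a small helper can assemble the argument list for either case. This is a sketch under assumptions: the function `build_mysqldump_command` is hypothetical, and the `--databases` option (used here to select individual databases) is a mysqldump option that is not shown in the examples above. The helper only builds the list; it does not run anything.

```python
def build_mysqldump_command(databases=None, master_data=False):
    """Return the mysqldump argument list for a replication snapshot."""
    # --master-data records the binary log coordinates in the dump;
    # otherwise --lock-all-tables is used, as in the first example above.
    command = ["mysqldump", "--master-data" if master_data else "--lock-all-tables"]
    if databases:
        # Dump only the named databases.
        command += ["--databases", *databases]
    else:
        command.append("--all-databases")
    return command


full_dump = build_mysqldump_command()
partial_dump = build_mysqldump_command(["sales"], master_data=True)
```

The resulting list can be passed to `subprocess.run` with standard output redirected to the dump file.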

If your database is particularly large then copying the raw data files may be more efficient than using mysqldump and importing the file on each slave.

However, using this method with tables in storage engines with complex caching or logging algorithms may not give you a perfect “in time” snapshot as cache information and logging updates may not have been applied, even if you have acquired a global read lock. How the storage engine responds to this depends on its crash recovery abilities.

For example, if you are using InnoDB tables, you should use the InnoDB Hot Backup tool to obtain a consistent snapshot. This tool records the log name and offset corresponding to the snapshot to be later used on the slave. Hot Backup is a non-free (commercial) tool that is not included in the standard MySQL distribution. See the InnoDB Hot Backup home page at http://www.innodb.com/hot-backup for detailed information.

Otherwise, you can obtain a reliable binary snapshot of InnoDB tables only after shutting down the MySQL Server.

To create a raw data snapshot of MyISAM tables you can use standard copy tools such as cp or copy, a remote copy tool such as scp or rsync, an archiving tool such as zip or tar, or a file system snapshot tool such as dump, providing that your MySQL data files exist on a single filesystem. If you are only replicating certain databases then make sure you only copy those files that relate to those tables. (For InnoDB, all tables in all databases are stored in a single file unless you have the innodb_file_per_table option enabled.)

You may want to specifically exclude the following files from your archive:

Files relating to the mysql database.

The master.info file.

The master's binary log files.

Any relay log files.
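When the copy is scripted, the exclusions listed above can be expressed as file name patterns. The patterns in this sketch are assumptions: they presume a binary log base name of mysql-bin and host-based relay log names, so adjust them to match your own server before relying on them.

```python
from fnmatch import fnmatch

# Assumed file name patterns, relative to the data directory.
EXCLUDE_PATTERNS = [
    "mysql/*",        # files relating to the mysql database
    "master.info",    # the master.info file
    "mysql-bin.*",    # binary logs, assuming log-bin=mysql-bin
    "*-relay-bin.*",  # relay logs, assuming host-based relay log names
]


def should_exclude(relative_path: str) -> bool:
    """Return True if a path relative to the data directory should be left out."""
    return any(fnmatch(relative_path, pattern) for pattern in EXCLUDE_PATTERNS)
```

A copy script would call `should_exclude` for each file and skip those for which it returns True.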

To get the most consistent results with a raw data snapshot you should shut down the server during the process, as below:

Acquire a read lock and get the master's status. See Section 15.1.1.4, “Obtaining the Master Replication Information”.

In a separate session, shut down the MySQL server:

shell> mysqladmin shutdown

Take a copy of the MySQL data files. Examples are shown below for common solutions - you need to choose only one of these solutions:

shell> tar cf /tmp/db.tar ./data
shell> zip -r /tmp/db.zip ./data
shell> rsync --recursive ./data /tmp/dbdata

Start up the MySQL instance on the master.

If you are not using InnoDB tables, you can get a snapshot of the system from a master without shutting down the server as described in the following steps:

Acquire a read lock and get the master's status. See Section 15.1.1.4, “Obtaining the Master Replication Information”.

Take a copy of the MySQL data files. Examples are shown below for common solutions - you need to choose only one of these solutions:

shell> tar cf /tmp/db.tar ./data
shell> zip -r /tmp/db.zip ./data
shell> rsync --recursive ./data /tmp/dbdata

In the client where you acquired the read lock, free the lock:

mysql> UNLOCK TABLES;

Once you have created the archive or copy of the database, you will need to copy the files to each slave before starting the slave replication process.

Setting up replication with a new Master and Slaves (i.e. with no existing data) is the easiest and most straightforward method for setting up replication.

You can also use this method if you are setting up new servers and have an existing dump of the databases that you want to load into your replication configuration. By loading the data onto a new master, the data will be automatically replicated to the slaves.

To set up replication between a new master and slave:

Configure the MySQL master with the necessary configuration properties. See Section 15.1.1.2, “Setting the Replication Master Configuration”.

Start up the MySQL master.

Set up a user. See Section 15.1.1.1, “Creating a User for Replication”.

Obtain the master status information. See Section 15.1.1.4, “Obtaining the Master Replication Information”.

Free the read lock:

mysql> UNLOCK TABLES;

On the slave, edit the MySQL configuration. See Section 15.1.1.3, “Setting the Replication Slave Configuration”.

Start up the MySQL slave.

Execute the CHANGE MASTER command to set the master replication server configuration.

Because there is no data to load or exchange on a new server configuration you do not need to copy or import any information.

If you are setting up a new replication environment using the data from an existing database server, you will now need to run the dump file on the master. The database updates will automatically be propagated to the slaves:

shell> mysql -h master < fulldb.dump

When setting up replication with existing data, you will need to decide how best to get the data from the master to the slave before starting the replication service.

The basic process for setting up replication with existing data is as follows:

If you have not already configured the server-id and binary logging, you will need to shut down your master to configure these options. See Section 15.1.1.2, “Setting the Replication Master Configuration”.

If you have to shut down your master database, then this is a good opportunity to take a snapshot of the database. You should obtain the master status (see Section 15.1.1.4, “Obtaining the Master Replication Information”) before taking the database down, updating the configuration and taking a snapshot. For information on how to create a snapshot using raw data files, see Section 15.1.1.6, “Creating a Data Snapshot Using Raw Data Files”.

If your server is already correctly configured, obtain the master status (see Section 15.1.1.4, “Obtaining the Master Replication Information”) and then use mysqldump to take a snapshot (see Section 15.1.1.5, “Creating a Data Snapshot Using mysqldump”) or take a raw snapshot of the live database using the guide in Section 15.1.1.6, “Creating a Data Snapshot Using Raw Data Files”.

With the MySQL master running, create a user to be used by the slave when connecting to the master during replication. See Section 15.1.1.1, “Creating a User for Replication”.

Update the configuration of the slave. See Section 15.1.1.3, “Setting the Replication Slave Configuration”.

The next step depends on how you created the snapshot of data on the master.

If you used mysqldump:

Start up the slave, skipping replication by using the --skip-slave-start option.

Import the dump file:

shell> mysql < fulldb.dump

If you created a snapshot using the raw data files:

Extract the data files into your slave data directory. For example:

shell> tar xvf dbdump.tar

You may need to set permissions and ownership on the files to match the configuration of your slave.

Start up the slave, skipping replication by using the --skip-slave-start option.

Configure the slave with the master status information. This will tell the slave the binary log file and position within the file where replication needs to start, and configure the login credentials and hostname of the master. For more information on the statement required, see Section 15.1.1.10, “Setting the Master Configuration on the Slave”.

Start the slave threads:

mysql> START SLAVE;

After you have performed this procedure, the slave should connect to the master and catch up on any updates that have occurred since the snapshot was taken.

If you have forgotten to set the server-id option for the master, slaves cannot connect to it.

If you have forgotten to set the server-id option for the slave, you get the following error in the slave's error log:

Warning: You should set server-id to a non-0 value if master_host is set; we will force server id to 2, but this MySQL server will not act as a slave.

You also find error messages in the slave's error log if it is not able to replicate for any other reason.

Once a slave is replicating, you can find in its data directory one file named master.info and another named relay-log.info. The slave uses these two files to keep track of how much of the master's binary log it has processed. Do not remove or edit these files unless you know exactly what you are doing and fully understand the implications. Even in that case, it is preferred that you use the CHANGE MASTER TO statement to change replication parameters. The slave will use the values specified in the statement to update the status files automatically.

Note

The content of master.info overrides some of the server options specified on the command line or in my.cnf. See Section 15.1.2, “Replication Startup Options and Variables”, for more details.

Once you have a snapshot of the master, you can use it to set up other slaves by following the slave portion of the procedure just described. You do not need to take another snapshot of the master; you can use the same one for each slave.

If you want to add another slave to the existing replication configuration then you can do so without stopping the master. Instead, you duplicate the settings on the slaves.

To duplicate the slave:

Shut down the existing slave:

shell> mysqladmin shutdown

Copy the data directory from the existing slave to the new slave. You can do this by creating an archive using tar or WinZip, or by performing a direct copy using a tool such as cp or rsync. Ensure you also copy the log files and relay log files.

Note

A common problem that is encountered when adding new replication slaves is that the new slave fails with a series of warning and error messages like these:

071118 16:44:10 [Warning] Neither --relay-log nor --relay-log-index were used; so replication may break when this MySQL server acts as a slave and has his hostname changed!! Please use '--relay-log=new_slave_hostname-relay-bin' to avoid this problem.
071118 16:44:10 [ERROR] Failed to open the relay log './old_slave_hostname-relay-bin.003525' (relay_log_pos 22940879)
071118 16:44:10 [ERROR] Could not find target log during relay log initialization
071118 16:44:10 [ERROR] Failed to initialize the master info structure

This is due to the fact that, if the --relay-log option is not specified, the relay log files contain the hostname as part of their filenames. (This is also true of the relay log index file if the --relay-log-index option is not used. See Section 15.1.2, “Replication Startup Options and Variables”, for more information about these options.)

To avoid this problem, use the same value for --relay-log on the new slave that was used on the existing slave. (If this option was not set explicitly on the existing slave, use existing_slave_hostname-relay-bin.) Likewise, set the --relay-log-index option on the new slave to match what was used on the existing slave. (If this option was not set explicitly on the existing slave, use existing_slave_hostname-relay-bin.index.)

If the new slave has already been started and has failed with errors like those shown above, recover as follows:

If you have not already done so, issue a STOP SLAVE on the new slave.

If you have already started the existing slave again, issue a STOP SLAVE on the existing slave as well.

Copy the contents of the existing slave's relay log index file into the new slave's relay log index file, making sure to overwrite any content already in the file.

Proceed with the remaining steps in this section.

Copy the master.info and relay-log.info files from the existing slave to the new slave. These files hold the current log positions.

Start the existing slave.

On the new slave, edit the configuration and give the new slave a new unique server-id.

Start the new slave; the master.info file options will be used to start the replication process.
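The relay log naming rule described in the note can be captured in a few lines. This sketch derives the values to set on the new slave when the existing slave relied on the default, host-based names; the helper `relay_log_options` and the host name `old-slave` are hypothetical.

```python
def relay_log_options(existing_slave_hostname: str) -> dict:
    """Options for a new slave so that it reuses the existing slave's relay log names."""
    base = f"{existing_slave_hostname}-relay-bin"
    return {"relay-log": base, "relay-log-index": f"{base}.index"}


options = relay_log_options("old-slave")
# Lines suitable for the [mysqld] section of the new slave's option file.
option_lines = [f"{name}={value}" for name, value in options.items()]
```

If the existing slave set these options explicitly, copy its values instead of deriving them from the host name.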

To set up the slave to communicate with the master for replication, you must tell the slave the necessary connection information. To do this, execute the following statement on the slave, replacing the option values with the actual values relevant to your system:

mysql> CHANGE MASTER TO
    ->     MASTER_HOST='master_host_name',
    ->     MASTER_USER='replication_user_name',
    ->     MASTER_PASSWORD='replication_password',
    ->     MASTER_LOG_FILE='recorded_log_file_name',
    ->     MASTER_LOG_POS=recorded_log_position;

Note

Replication cannot use Unix socket files. You must be able to connect to the master MySQL server using TCP/IP.

The following table shows the maximum allowable length for the string-valued options:

| Option | Maximum Length |
|---|---|
| MASTER_HOST | 60 |
| MASTER_USER | 16 |
| MASTER_PASSWORD | 32 |
| MASTER_LOG_FILE | 255 |
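When the statement is generated by a script, the length limits listed above can be checked before the statement is sent to the slave. The following is a minimal sketch, not an official tool: the function names are hypothetical, and the quoting helper handles only backslashes and single quotes.

```python
MAX_LENGTHS = {
    "MASTER_HOST": 60,
    "MASTER_USER": 16,
    "MASTER_PASSWORD": 32,
    "MASTER_LOG_FILE": 255,
}


def quote(value: str) -> str:
    """Quote a string literal, escaping backslashes and single quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def change_master_statement(host, user, password, log_file, log_pos):
    """Build a CHANGE MASTER TO statement after checking the length limits."""
    values = {
        "MASTER_HOST": host,
        "MASTER_USER": user,
        "MASTER_PASSWORD": password,
        "MASTER_LOG_FILE": log_file,
    }
    for name, value in values.items():
        if len(value) > MAX_LENGTHS[name]:
            raise ValueError(f"{name} exceeds {MAX_LENGTHS[name]} characters")
    clauses = [f"{name}={quote(value)}" for name, value in values.items()]
    clauses.append(f"MASTER_LOG_POS={int(log_pos)}")  # numeric, so unquoted
    return "CHANGE MASTER TO " + ", ".join(clauses) + ";"


statement = change_master_statement("master1", "repl", "slavepass", "mysql-bin.003", 73)
```

The host name `master1` is a placeholder; the user, password, log file and position reuse the example values from earlier in this section.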

This section describes the options that you can use on slave replication servers. You can specify these options either on the command line or in an option file.

On the master and each slave, you must use the server-id option to establish a unique replication ID. For each server, you should pick a unique positive integer in the range from 1 to 2^32 - 1, and each ID must be different from every other ID.

Example: server-id=3
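The server-id rule can be checked mechanically before servers are started. The following is a minimal Python sketch, not part of MySQL; the function name check_server_ids and the host-to-ID mapping are assumptions made for illustration only.

```python
MAX_SERVER_ID = 2**32 - 1  # upper bound of the range stated in the text


def check_server_ids(ids):
    """Return a list of problems found in a {host: server_id} mapping.

    Hypothetical helper: it checks only the two rules from the text,
    namely that each ID lies in 1..2^32-1 and that no ID is reused.
    """
    problems = []
    seen = {}  # server_id -> first host that used it
    for host, sid in ids.items():
        if not isinstance(sid, int) or not 1 <= sid <= MAX_SERVER_ID:
            problems.append(f"{host}: server-id {sid!r} is outside 1..{MAX_SERVER_ID}")
        elif sid in seen:
            problems.append(f"{host}: server-id {sid} is already used by {seen[sid]}")
        else:
            seen[sid] = host
    return problems
```

An empty result means the planned IDs satisfy both rules; each returned string names the host that violates one of them.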

Options that you can use on the master server for controlling binary logging are described in Section 5.2.3, “The Binary Log”.

Important

Some slave server replication options are ignored if a master.info file exists when the slave starts and contains a value for the option. The following options are handled this way:

- --master-host
- --master-user
- --master-password
- --master-port
- --master-connect-retry
- --master-ssl
- --master-ssl-ca
- --master-ssl-capath
- --master-ssl-cert
- --master-ssl-cipher
- --master-ssl-key

The master.info file format in MySQL 5.0 includes values corresponding to the SSL options. In addition, the file format includes as its first line the number of lines in the file. (See Section 15.4.2, “Replication Relay and Status Files”.) If you upgrade an older server (before MySQL 4.1.1) to a newer version, the new server upgrades the master.info file to the new format automatically when it starts. However, if you downgrade a newer server to an older version, you should remove the first line manually before starting the older server for the first time.

If no master.info file exists when the slave server starts, it uses the values for those options that are specified in option files or on the command line. This occurs when you start the server as a replication slave for the very first time, or when you have run RESET SLAVE and then have shut down and restarted the slave.

If the master.info file exists when the slave server starts, the server uses its contents and ignores any options that correspond to the values listed in the file. Thus, if you start the slave server with different values of the startup options that correspond to values in the master.info file, the different values have no effect, because the server continues to use the master.info file. To use different values, you must either restart after removing the master.info file or (preferably) use the CHANGE MASTER TO statement to reset the values while the slave is running.

Suppose that you specify this option in your my.cnf file:

[mysqld]

master-host=some_host

The first time you start the server as a replication slave, it reads and uses that option from the my.cnf file. The server then records the value in the master.info file. The next time you start the server, it reads the master host value from the master.info file only and ignores the value in the option file. If you modify the my.cnf file to specify a different master host of some_other_host, the change still has no effect. You should use CHANGE MASTER TO instead.
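The precedence rule just described can be modeled in a few lines. This is an illustrative Python sketch only, not server code; the function name and the use of dictionaries to stand in for the option file and master.info are assumptions.

```python
def effective_master_settings(option_file, master_info=None):
    """Model which connection settings a slave uses at startup.

    option_file: dict of startup options (from my.cnf or the command line).
    master_info: dict of values recorded in master.info, or None if the
                 file does not exist (first start, or after RESET SLAVE
                 followed by a restart).
    """
    if master_info is None:
        # No master.info yet: the startup options are used (and then recorded).
        return dict(option_file)
    # master.info exists: any value it holds overrides the matching option.
    merged = dict(option_file)
    merged.update(master_info)
    return merged
```

Calling the function once with only the option file and then again with the first result passed as master_info reproduces the my.cnf scenario: a changed master-host in the option file has no effect on the second start.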


Because the server gives an existing master.info file precedence over the startup options just described, you might prefer not to use startup options for these values at all, and instead specify them by using the CHANGE MASTER TO statement. See Section 12.6.2.1, “CHANGE MASTER TO Syntax”.

This example shows a more extensive use of startup options to configure a slave server:

[mysqld]

server-id=2

master-host=db-master.mycompany.com

master-port=3306

master-user=pertinax

master-password=freitag

master-connect-retry=60

report-host=db-slave.mycompany.com

The following list describes startup options for controlling replication. Many of these options can be reset while the server is running by using the CHANGE MASTER TO statement. Others, such as the --replicate-* options, can be set only when the slave server starts.

--log-slave-updates

Normally, a slave does not log to its own binary log any updates that are received from a master server. This option tells the slave to log the updates performed by its SQL thread to its own binary log. For this option to have any effect, the slave must also be started with the --log-bin option to enable binary logging. --log-slave-updates is used when you want to chain replication servers. For example, you might want to set up replication servers using this arrangement:

A -> B -> C

Here, A serves as the master for the slave B, and B serves as the master for the slave C. For this to work, B must be both a master and a slave. You must start both A and B with --log-bin to enable binary logging, and B with the --log-slave-updates option so that updates received from A are logged by B to its binary log.

--log-warnings[=level]

This option causes a server to print more messages to the error log about what it is doing. With respect to replication, the server generates warnings that it succeeded in reconnecting after a network/connection failure, and informs you as to how each slave thread started. This option is enabled by default; to disable it, use --skip-log-warnings. Aborted connections are not logged to the error log unless the value is greater than 1.

--master-connect-retry=seconds

The number of seconds that the slave thread sleeps before trying to reconnect to the master in case the master goes down or the connection is lost. The value in the master.info file takes precedence if it can be read. If not set, the default is 60. Connection retries are not invoked until the slave times out reading data from the master according to the value of --slave-net-timeout. The number of reconnection attempts is limited by the --master-retry-count option.

--master-host=host_name

The hostname or IP number of the master replication server. The value in master.info takes precedence if it can be read. If no master host is specified, the slave thread does not start.

--master-info-file=file_name

The name to use for the file in which the slave records information about the master. The default name is master.info in the data directory.

--master-password=password

The password of the account that the slave thread uses for authentication when it connects to the master. The value in the master.info file takes precedence if it can be read. If not set, an empty password is assumed.

--master-port=port_number

The TCP/IP port number that the master is listening on. The value in the master.info file takes precedence if it can be read. If not set, the compiled-in setting is assumed (normally 3306).

--master-retry-count=count

The number of times that the slave tries to connect to the master before giving up. Reconnects are attempted at intervals set by --master-connect-retry and reconnects are triggered when data reads by the slave time out according to the --slave-net-timeout option. The default value is 86400.

--master-ssl, --master-ssl-ca=file_name, --master-ssl-capath=directory_name, --master-ssl-cert=file_name, --master-ssl-cipher=cipher_list, --master-ssl-key=file_name

These options are used for setting up a secure replication connection to the master server using SSL. Their meanings are the same as the corresponding --ssl, --ssl-ca, --ssl-capath, --ssl-cert, --ssl-cipher, --ssl-key options that are described in Section 5.5.7.3, “SSL Command Options”. The values in the master.info file take precedence if they can be read.

--master-user=user_name

The username of the account that the slave thread uses for authentication when it connects to the master. This account must have the REPLICATION SLAVE privilege. The value in the master.info file takes precedence if it can be read. If the master username is not set, the name test is assumed.

--max-relay-log-size=size

The size at which the server rotates relay log files automatically. For more information, see Section 15.4.2, “Replication Relay and Status Files”. The default size is 1GB.

--read-only

When this option is given, the server allows no updates except from users that have the SUPER privilege or (on a slave server) from updates performed by slave threads. On a slave server, this can be useful to ensure that the slave accepts updates only from its master server and not from clients. As of MySQL 5.0.16, this option does not apply to TEMPORARY tables.

--relay-log=file_name

The basename for the relay log. The default basename is host_name-relay-bin. Setting this option can be useful if your relay logs tend to be large (their size is governed by max_relay_log_size) and you need to put them in some area different from the data directory, or if you want to increase speed by balancing load between disks.

--relay-log-index=file_name

The name to use for the relay log index file. The default name is host_name-relay-bin.index, where host_name is the name of the slave server.

--relay-log-info-file=file_name

The name to use for the file in which the slave records information about the relay logs. The default name is relay-log.info in the data directory.

--relay-log-purge={0|1}

Disable or enable automatic purging of relay logs as soon as they are not needed any more. The default value is 1 (enabled). This is a global variable that can be changed dynamically with SET GLOBAL relay_log_purge = N.

--relay-log-space-limit=size

This option places an upper limit on the total size in bytes of all relay logs on the slave. A value of 0 means “no limit.” This is useful for a slave server host that has limited disk space. When the limit is reached, the I/O thread stops reading binary log events from the master server until the SQL thread has caught up and deleted some unused relay logs. Note that this limit is not absolute: There are cases where the SQL thread needs more events before it can delete relay logs. In that case, the I/O thread exceeds the limit until it becomes possible for the SQL thread to delete some relay logs, because not doing so would cause a deadlock. You should not set --relay-log-space-limit to less than twice the value of --max-relay-log-size (or --max-binlog-size if --max-relay-log-size is 0). In that case, there is a chance that the I/O thread waits for free space because --relay-log-space-limit is exceeded, but the SQL thread has no relay log to purge and is unable to satisfy the I/O thread. This forces the I/O thread to temporarily ignore --relay-log-space-limit.

--replicate-do-db=db_name

Tell the slave to restrict replication to statements where the default database (that is, the one selected by USE) is db_name. To specify more than one database, use this option multiple times, once for each database. Note that this does not replicate cross-database statements such as UPDATE some_db.some_table SET foo='bar' while having selected a different database or no database.

Warning

To specify multiple databases you must use multiple instances of this option. Because database names can contain commas, if you supply a comma-separated list then the list will be treated as the name of a single database.

An example of what does not work as you might expect: If the slave is started with --replicate-do-db=sales and you issue the following statements on the master, the UPDATE statement is not replicated:

USE prices;

UPDATE sales.january SET amount=amount+1000;
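The behavior in this example follows from the fact that only the default database is compared against the option values. The Python sketch below models that single check; it is an illustration with an assumed function name, and it does not reproduce the full rule evaluation covered in Section 15.4.3.

```python
def passes_replicate_do_db(default_db, do_dbs):
    """Model the --replicate-do-db check for one statement.

    default_db: the database selected with USE when the statement ran
                (None if no database was selected).
    do_dbs:     collection of names given via --replicate-do-db.

    Only the default database is compared; tables named inside the
    statement itself are never inspected.
    """
    if not do_dbs:
        return True  # no --replicate-do-db given, so this check filters nothing
    return default_db in do_dbs
```

With do_dbs set to {"sales"}, a statement issued after USE prices fails the check even though it updates sales.january, which matches the example above.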

The main reason for this “just check the default database” behavior is that it is difficult from the statement alone to know whether it should be replicated (for example, if you are using multiple-table DELETE statements or multiple-table UPDATE statements that act across multiple databases). It is also faster to check only the default database rather than all databases if there is no need.

If you need cross-database updates to work, use --replicate-wild-do-table=db_name.% instead. See Section 15.4.3, “How Servers Evaluate Replication Rules”.

--replicate-do-table=db_name.tbl_name

Tell the slave thread to restrict replication to the specified table. To specify more than one table, use this option multiple times, once for each table. This works for cross-database updates, in contrast to --replicate-do-db. See Section 15.4.3, “How Servers Evaluate Replication Rules”.

--replicate-ignore-db=db_name

Tells the slave to not replicate any statement where the default database (that is, the one selected by USE) is db_name. To specify more than one database to ignore, use this option multiple times, once for each database. You should not use this option if you are using cross-database updates and you do not want these updates to be replicated. See Section 15.4.3, “How Servers Evaluate Replication Rules”.

An example of what does not work as you might expect: If the slave is started with --replicate-ignore-db=sales and you issue the following statements on the master, the UPDATE statement is replicated:

USE prices;

UPDATE sales.january SET amount=amount+1000;

Note

In the preceding example the statement is replicated because --replicate-ignore-db only applies to the default database (set through the USE statement). Because the sales database was specified explicitly in the statement, the statement has not been filtered.

If you need cross-database updates to work, use --replicate-wild-ignore-table=db_name.% instead. See Section 15.4.3, “How Servers Evaluate Replication Rules”.

--replicate-ignore-table=db_name.tbl_name

Tells the slave thread to not replicate any statement that updates the specified table, even if any other tables might be updated by the same statement. To specify more than one table to ignore, use this option multiple times, once for each table. This works for cross-database updates, in contrast to --replicate-ignore-db. See Section 15.4.3, “How Servers Evaluate Replication Rules”.

--replicate-rewrite-db=from_name->to_name

Tells the slave to translate the default database (that is, the one selected by USE) to to_name if it was from_name on the master. Only statements involving tables are affected (not statements such as CREATE DATABASE, DROP DATABASE, and ALTER DATABASE), and only if from_name is the default database on the master. This does not work for cross-database updates. To specify multiple rewrites, use this option multiple times. The server uses the first one with a from_name value that matches. The database name translation is done before the --replicate-* rules are tested.

If you use this option on the command line and the “>” character is special to your command interpreter, quote the option value. For example:

shell> mysqld --replicate-rewrite-db="olddb->newdb"

--replicate-same-server-id

To be used on slave servers. Usually you should use the default setting of 0, to prevent infinite loops caused by circular replication. If set to 1, the slave does not skip events having its own server ID. Normally, this is useful only in rare configurations. Cannot be set to 1 if --log-slave-updates is used. Note that by default the slave I/O thread does not even write binary log events to the relay log if they have the slave's server id (this optimization helps save disk usage). So if you want to use --replicate-same-server-id, be sure to start the slave with this option before you make the slave read its own events that you want the slave SQL thread to execute.

--replicate-wild-do-table=db_name.tbl_name

Tells the slave thread to restrict replication to statements where any of the updated tables match the specified database and table name patterns. Patterns can contain the “%” and “_” wildcard characters, which have the same meaning as for the LIKE pattern-matching operator. To specify more than one table, use this option multiple times, once for each table. This works for cross-database updates. See Section 15.4.3, “How Servers Evaluate Replication Rules”.

Example: --replicate-wild-do-table=foo%.bar% replicates only updates that use a table where the database name starts with foo and the table name starts with bar.

If the table name pattern is %, it matches any table name and the option also applies to database-level statements (CREATE DATABASE, DROP DATABASE, and ALTER DATABASE). For example, if you use --replicate-wild-do-table=foo%.%, database-level statements are replicated if the database name matches the pattern foo%.

To include literal wildcard characters in the database or table name patterns, escape them with a backslash. For example, to replicate all tables of a database that is named my_own%db, but not replicate tables from the my1ownAABCdb database, you should escape the “_” and “%” characters like this: --replicate-wild-do-table=my\_own\%db. If you're using the option on the command line, you might need to double the backslashes or quote the option value, depending on your command interpreter. For example, with the bash shell, you would need to type --replicate-wild-do-table=my\\_own\\%db.

--replicate-wild-ignore-table=db_name.tbl_name

Tells the slave thread not to replicate a statement where any table matches the given wildcard pattern. To specify more than one table to ignore, use this option multiple times, once for each table. This works for cross-database updates. See Section 15.4.3, “How Servers Evaluate Replication Rules”.
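The wildcard matching shared by --replicate-wild-do-table and --replicate-wild-ignore-table can be illustrated with a short Python sketch. This is a simplified model under stated assumptions, not the server's implementation: the function names are hypothetical, the option value is split at its first period, and matching is case-sensitive here.

```python
import re


def wild_to_regex(pattern):
    """Translate a LIKE-style pattern (% and _, backslash escapes) to a regex."""
    out = []
    i = 0
    while i < len(pattern):
        ch = pattern[i]
        if ch == "\\" and i + 1 < len(pattern):
            out.append(re.escape(pattern[i + 1]))  # escaped character is literal
            i += 2
            continue
        if ch == "%":
            out.append(".*")   # any sequence of characters, including none
        elif ch == "_":
            out.append(".")    # exactly one character
        else:
            out.append(re.escape(ch))
        i += 1
    return re.compile("".join(out), re.DOTALL)


def wild_table_match(option_value, db, table):
    """Check db.table against one db_pattern.tbl_pattern option value."""
    db_pat, tbl_pat = option_value.split(".", 1)  # assumption: first "." separates the parts
    return bool(wild_to_regex(db_pat).fullmatch(db)
                and wild_to_regex(tbl_pat).fullmatch(table))
```

For instance, the escaped value my\_own\%db.% matches tables in the database my_own%db but not in my1ownAABCdb, whereas the unescaped my_own%db.% matches both.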

Example: --replicate-wild-ignore-table=foo%.bar% does not replicate updates that use a table where the database name starts with foo and the table name starts with bar.

For information about how matching works, see the description of the --replicate-wild-do-table option. The rules for including literal wildcard characters in the option value are the same as for --replicate-wild-do-table as well.

--report-host=host_name

The hostname or IP number of the slave to be reported to the master during slave registration. This value appears in the output of SHOW SLAVE HOSTS on the master server. Leave the value unset if you do not want the slave to register itself with the master. Note that it is not sufficient for the master to simply read the IP number of the slave from the TCP/IP socket after the slave connects. Due to NAT and other routing issues, that IP may not be valid for connecting to the slave from the master or other hosts.

--report-password=password

The account password of the slave to be reported to the master during slave registration. This value appears in the output of SHOW SLAVE HOSTS on the master server if the --show-slave-auth-info option is given.

--report-port=slave_port_num

The TCP/IP port number for connecting to the slave, to be reported to the master during slave registration. Set this only if the slave is listening on a non-default port or if you have a special tunnel from the master or other clients to the slave. If you are not sure, do not use this option.

--report-user=user_name

The account username of the slave to be reported to the master during slave registration. This value appears in the output of SHOW SLAVE HOSTS on the master server if the --show-slave-auth-info option is given.

--show-slave-auth-info

Display slave usernames and passwords in the output of SHOW SLAVE HOSTS on the master server for slaves started with the --report-user and --report-password options.

--skip-slave-start

Tells the slave server not to start the slave threads when the server starts. To start the threads later, use a START SLAVE statement.

--slave_compressed_protocol={0|1}

If this option is set to 1, use compression for the slave/master protocol if both the slave and the master support it. The default is 0 (no compression).

--slave-load-tmpdir=file_name

The name of the directory where the slave creates temporary files. This option is by default equal to the value of the tmpdir system variable. When the slave SQL thread replicates a LOAD DATA INFILE statement, it extracts the file to be loaded from the relay log into temporary files, and then loads these into the table. If the file loaded on the master is huge, the temporary files on the slave are huge, too. Therefore, it might be advisable to use this option to tell the slave to put temporary files in a directory located in some filesystem that has a lot of available space. In that case, the relay logs are huge as well, so you might also want to use the --relay-log option to place the relay logs in that filesystem.

The directory specified by this option should be located in a disk-based filesystem (not a memory-based filesystem) because the temporary files used to replicate LOAD DATA INFILE must survive machine restarts. The directory also should not be one that is cleared by the operating system during the system startup process.

--slave-net-timeout=seconds

The number of seconds to wait for more data from the master before the slave considers the connection broken, aborts the read, and tries to reconnect. The first retry occurs immediately after the timeout. The interval between retries is controlled by the --master-connect-retry option and the number of reconnection attempts is limited by the --master-retry-count option. The default is 3600 seconds (one hour).

--slave-skip-errors=[err_code1,err_code2,...|all]

Normally, replication stops when an error occurs on the slave. This gives you the opportunity to resolve the inconsistency in the data manually. This option tells the slave SQL thread to continue replication when a statement returns any of the errors listed in the option value.

Do not use this option unless you fully understand why you are getting errors. If there are no bugs in your replication setup and client programs, and no bugs in MySQL itself, an error that stops replication should never occur. Indiscriminate use of this option results in slaves becoming hopelessly out of synchrony with the master, with you having no idea why this has occurred.

For error codes, you should use the numbers provided by the error message in your slave error log and in the output of SHOW SLAVE STATUS. Appendix B, Errors, Error Codes, and Common Problems, lists server error codes.

You can also (but should not) use the very non-recommended value of all to cause the slave to ignore all error messages and keep going regardless of what happens. Needless to say, if you use all, there are no guarantees regarding the integrity of your data. Please do not complain (or file bug reports) in this case if the slave's data is not anywhere close to what it is on the master. You have been warned.

Examples:

--slave-skip-errors=1062,1053

--slave-skip-errors=all

Once replication has been started it should execute without requiring much regular administration. Depending on your replication environment, you will want to check the replication status of each slave periodically: daily, or even more frequently.


The most common task when managing a replication process is to ensure that replication is taking place and that there have been no errors between the slave and the master.

The primary command for this is SHOW SLAVE STATUS, which you must execute on each slave:

mysql> SHOW SLAVE STATUS\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: master1

Master_User: root

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000004

Read_Master_Log_Pos: 931

Relay_Log_File: slave1-relay-bin.000056

Relay_Log_Pos: 950

Relay_Master_Log_File: mysql-bin.000004

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 931

Relay_Log_Space: 1365

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

1 row in set (0.01 sec)

The key fields from the status report to examine are:

- Slave_IO_State: indicates the current status of the slave. See Section 7.5.5.5, “Replication Slave I/O Thread States”, and Section 7.5.5.6, “Replication Slave SQL Thread States”, for more information.
- Slave_IO_Running: shows whether the IO thread for reading the master's binary log is running.
- Slave_SQL_Running: shows whether the SQL thread for executing events in the relay log is running.
- Last_Error: shows the last error registered when processing the relay log. Ideally this should be blank, indicating no errors.
- Seconds_Behind_Master: shows the number of seconds that the slave SQL thread is behind processing the master binary log. A high number (or an increasing one) can indicate that the slave is unable to cope with the large number of queries from the master.

A value of 0 for Seconds_Behind_Master can usually be interpreted as meaning that the slave has caught up with the master, but there are some cases where this is not strictly true. For example, this can occur if the network connection between master and slave is broken but the slave I/O thread has not yet noticed this; that is, slave_net_timeout has not yet elapsed.

It is also possible that transient values for Seconds_Behind_Master may not reflect the situation accurately. When the slave SQL thread has caught up on I/O, Seconds_Behind_Master displays 0; but when the slave I/O thread is still queuing up a new event, Seconds_Behind_Master may show a large value until the SQL thread finishes executing the new event. This is especially likely when the events have old timestamps; in such cases, if you execute SHOW SLAVE STATUS several times in a relatively short period, you may see this value change back and forth repeatedly between 0 and a relatively large value.
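Checking these fields by hand on every slave is tedious, so the inspection can be scripted. The following Python sketch parses captured vertical-format status output and reports on the key fields listed above. It is an illustration only: the function names and the 60-second lag threshold are assumptions, and a single reading of Seconds_Behind_Master should be treated with the caution described above.

```python
def parse_vertical_status(text):
    """Parse vertical-format SHOW SLAVE STATUS output into a dict of strings."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("*") or ":" not in line:
            continue  # skip blank lines, the row banner and the trailing summary
        name, _, value = line.partition(":")
        fields[name.strip()] = value.strip()
    return fields


def replication_problems(fields, max_lag=60):
    """Return human-readable warnings based on the key status fields.

    max_lag is a hypothetical threshold in seconds chosen for this sketch.
    """
    problems = []
    if fields.get("Slave_IO_Running") != "Yes":
        problems.append("I/O thread is not running")
    if fields.get("Slave_SQL_Running") != "Yes":
        problems.append("SQL thread is not running")
    if fields.get("Last_Error"):
        problems.append("last error: " + fields["Last_Error"])
    lag = fields.get("Seconds_Behind_Master", "NULL")
    if lag == "NULL":
        problems.append("lag is unknown")  # no numeric value was reported
    elif int(lag) > max_lag:
        problems.append(f"slave is {lag} seconds behind the master")
    return problems
```

An empty list from replication_problems means both threads are running, no error is recorded, and the reported lag is within the chosen threshold.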

On the master, you can check the status of slaves by examining the list of running processes. Slaves execute the Binlog Dump command:

mysql> SHOW PROCESSLIST\G

*************************** 4. row ***************************

Id: 10

User: root

Host: slave1:58371

db: NULL

Command: Binlog Dump

Time: 777

State: Has sent all binlog to slave; waiting for binlog to be updated

Info: NULL

Because it is the slave that drives the core of the replication process, very little information is available in this report.

If you have used the --report-host option, then the SHOW SLAVE HOSTS statement will show basic information about connected slaves:

mysql> SHOW SLAVE HOSTS;

+-----------+--------+------+-------------------+-----------+
| Server_id | Host   | Port | Rpl_recovery_rank | Master_id |
+-----------+--------+------+-------------------+-----------+
|        10 | slave1 | 3306 |                 0 |         1 |
+-----------+--------+------+-------------------+-----------+

1 row in set (0.00 sec)

The output includes the ID of the slave server, the value of the --report-host option, the connecting port, master ID and the priority of the slave for receiving binary log updates.

You can stop and start the replication of statements on the slave using the STOP SLAVE and START SLAVE statements.

To stop execution of the binary log from the master, use STOP SLAVE:

mysql> STOP SLAVE;

When execution is stopped, the slave does not read the binary log from the master (the IO_THREAD) and stops processing events from the relay log that have not yet been executed (the SQL_THREAD). You can pause either the IO or SQL threads individually by specifying the thread type. For example:

mysql> STOP SLAVE IO_THREAD;

Stopping the SQL thread can be useful if you want to perform a backup or other task on a slave that only processes events from the master. The IO thread will continue to read events from the master, but they will not be executed, which will make it easier for the slave to catch up when you start slave operations again.

Stopping the IO thread will allow the statements in the relay log to be executed up until the point where the relay log has ceased to receive new events. This can be useful when you want to let the slave catch up with the events it has already received from the master, or when you want to perform administration on the slave while ensuring that it holds all updates up to a specific point. This method can also be used to pause the slave while you conduct administration on the master, without leaving a massive backlog of events to be executed when replication is started again.

To start execution again, use the START SLAVE statement:

mysql> START SLAVE;

If necessary, you can start either the IO_THREAD or SQL_THREAD threads individually.

- 15.2.1. Using Replication for Backups

- 15.2.2. Using Replication with Different Master and Slave Storage Engines

- 15.2.3. Using Replication for Scale-Out

- 15.2.4. Replicating Different Databases to Different Slaves

- 15.2.5. Improving Replication Performance

- 15.2.6. Switching Masters During Failover

- 15.2.7. Setting Up Replication Using SSL

Replication can be used in many different environments for a range of purposes. In this section you will find general notes and advice on using replication for specific solution types.

For information on using replication in a backup environment, including notes on the setup, backup procedure, and files to back up, see Section 15.2.1, “Using Replication for Backups”.

For advice and tips on using different storage engines on the master and slaves, see Section 15.2.2, “Using Replication with Different Master and Slave Storage Engines”.

Using replication as a scale-out solution requires some changes in the logic and operation of applications that use the solution. See Section 15.2.3, “Using Replication for Scale-Out”.

For performance or data distribution reasons you may want to replicate different databases to different replication slaves. See Section 15.2.4, “Replicating Different Databases to Different Slaves”.

As the number of replication slaves increases, the load on the master can increase (because of the need to replicate the binary log to each slave) and lead to a reduction in performance of the master. For tips on improving your replication performance, including using a single secondary server as a replication master, see Section 15.2.5, “Improving Replication Performance”.

For guidance on switching masters, or converting slaves into masters as part of an emergency failover solution, see Section 15.2.6, “Switching Masters During Failover”.

To secure your replication communication you can encrypt the communication channel by using SSL to exchange data. Step-by-step instructions can be found in Section 15.2.7, “Setting Up Replication Using SSL”.

You can use replication as a backup solution by replicating data from the master to a slave, and then backing up the slave. Because the slave can be paused and shut down without affecting the running operation of the master, you can produce an effective snapshot of 'live' data that would otherwise require a shutdown of the master database.

How you back up the database will depend on the size of the database and whether you are backing up only the data, or the data and the replication slave state so that you can rebuild the slave in the event of failure. There are therefore two choices:

If you are using replication as a solution to enable you to back up the data on the master, and the size of your database is not too large, then the mysqldump tool may be suitable. See Section 15.2.1.1, “Backing Up a Slave Using mysqldump”.

For larger databases, where mysqldump would be impractical or inefficient, you can back up the raw data files instead. Using the raw data files option also means that you can back up the binary and relay logs that will enable you to recreate the slave in the event of a slave failure. For more information, see Section 15.2.1.2, “Backing Up Raw Data from a Slave”.

Using mysqldump to create a copy of the database enables you to capture all of the data in the database in a format that allows the information to be imported into another instance of MySQL. Because the format of the information is SQL statements the file can easily be distributed and applied to running servers in the event that you need access to the data in an emergency. However, if the size of your data set is very large then mysqldump may be impractical.

When using mysqldump you should stop the slave before starting the dump process to ensure that the dump contains a consistent set of data:

Stop the slave from processing requests. You can either stop the slave completely using mysqladmin:

shell> mysqladmin stop-slave

Alternatively, you can stop processing the relay log files by stopping the replication SQL thread. Using this method will allow the binary log data to be transferred. Within busy replication environments this may speed up the catch-up process when you start the slave processing again:

shell> mysql -e 'STOP SLAVE SQL_THREAD;'

Run mysqldump to dump your databases. You may either select databases to be dumped, or dump all databases. For more information see Section 4.5.4, “mysqldump — A Database Backup Program”. For example, to dump all databases:

shell> mysqldump --all-databases > fulldb.dump

Once the dump has completed, start slave operations again:

shell> mysqladmin start-slave

In the preceding example you may want to add login credentials (username, password) to the commands, and bundle the process up into a script that you can run automatically each day.
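The stop, dump, and restart sequence described above could be bundled into a script along the following lines. This is only a minimal Python sketch under stated assumptions: the function name, the `extra_options` and `run` parameters, and the example option-file path are hypothetical, and mysqldump's `--result-file` option is used here in place of shell redirection.

```python
import subprocess


def backup_slave_with_mysqldump(dump_path, extra_options=(), run=subprocess.check_call):
    """Stop the slave, dump all databases, then restart the slave.

    extra_options: options placed before each command, for example
    ["--defaults-extra-file=/path/to/backup.cnf"] (hypothetical path).
    run: callable that executes one command given as an argument list;
    it can be replaced so the sequence is exercised without a server.
    """
    options = list(extra_options)
    # Stop replication so the data cannot change while the dump runs.
    run(["mysqladmin"] + options + ["stop-slave"])
    try:
        # --result-file writes the dump to dump_path without shell redirection.
        run(["mysqldump"] + options + ["--all-databases", "--result-file=" + dump_path])
    finally:
        # Restart replication even if the dump failed.
        run(["mysqladmin"] + options + ["start-slave"])
```

Calling `backup_slave_with_mysqldump("fulldb.dump")` from a daily scheduled job would mirror the manual steps. The `finally` clause is a design choice so that a failed dump does not leave replication stopped.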

If you use this approach, make sure you monitor the slave replication process to ensure that the time taken to run the backup in this way is not affecting the slave's ability to keep up with events from the master. See Section 15.1.3.1, “Checking Replication Status”. If the slave is unable to keep up you may want to add another server and distribute the backup process. For an example of how to configure this scenario, see Section 15.2.4, “Replicating Different Databases to Different Slaves”.
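One way to check whether the slave is keeping up is to look at the `Seconds_Behind_Master` field reported by `SHOW SLAVE STATUS`. The following is a rough sketch that extracts that field from the text printed by `mysql -e 'SHOW SLAVE STATUS\G'`. It assumes the vertical `Name: value` output layout, and the function name is hypothetical.

```python
def seconds_behind_master(status_text):
    """Return Seconds_Behind_Master from vertical SHOW SLAVE STATUS output.

    Returns None when the field is missing or is not a number (the server
    reports NULL when the lag cannot be determined).
    """
    for line in status_text.splitlines():
        name, separator, value = line.partition(":")
        if separator and name.strip() == "Seconds_Behind_Master":
            value = value.strip()
            return int(value) if value.isdigit() else None
    return None
```

A backup script might compare the value before and some time after the dump, and raise an alert if the lag does not return to its usual level.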

To guarantee the integrity of the files that are copied, backing up the raw data files on your MySQL replication slave should take place while your slave server is shut down. If the MySQL server is still running then background tasks, particularly with storage engines with background processes such as InnoDB, may still be updating the database files. With InnoDB, these problems should be resolved during crash recovery, but since the slave server can be shut down during the backup process without affecting the execution of the master it makes sense to take advantage of this facility.

To shut down the server and back up the files:

Shut down the slave MySQL server:

shell> mysqladmin shutdown

Copy the data files. You can use any suitable copying or archive utility, including cp, tar or WinZip:

shell> tar cf /tmp/dbbackup.tar ./data

Start up the mysqld process again:

shell> mysqld_safe &

Under Windows:

C:\> "C:\Program Files\MySQL\MySQL Server 5.0\bin\mysqld"

Normally you should back up the entire data folder for the slave MySQL server. If you want to be able to restore the data and operate as a slave (for example, in the event of failure of the slave), then when you back up the slave's data, you should back up the slave status files, master.info and relay-log.info, along with the relay log files. These files are needed to resume replication after you restore the slave's data.
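The shutdown, copy, and restart sequence for a raw data backup can be sketched in Python as follows. This is an illustration, not a definitive implementation: the function and parameter names are hypothetical, the standard `tarfile` module stands in for the `tar` command, and the sketch assumes that `mysqladmin shutdown` returns only after the server has stopped and that the status files and relay logs live inside the data directory.

```python
import subprocess
import tarfile


def backup_slave_raw_data(datadir, archive_path,
                          run=subprocess.check_call, spawn=subprocess.Popen):
    """Shut down the slave, archive its data directory, then restart it."""
    # Assumption: mysqladmin returns only after the server has stopped.
    run(["mysqladmin", "shutdown"])
    try:
        with tarfile.open(archive_path, "w") as archive:
            # Adds the directory recursively, so master.info, relay-log.info
            # and the relay logs are included when they live in datadir.
            archive.add(datadir, arcname="data")
    finally:
        # Counterpart of "mysqld_safe &": start the server without waiting.
        spawn(["mysqld_safe"])
```

The `run` and `spawn` parameters exist only so that the sequence can be tried out without a running server.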

If you lose the relay logs but still have the relay-log.info file, you can check it to determine how far the SQL thread has executed in the master binary logs. Then you can use CHANGE MASTER TO with the MASTER_LOG_FILE and MASTER_LOG_POS options to tell the slave to re-read the binary logs from that point. Of course, this requires that the binary logs still exist on the master server.
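As an illustration of that recovery step, the sketch below builds a CHANGE MASTER TO statement from the contents of relay-log.info. It assumes the file holds one value per line in this order: relay log name, relay log position, master binary log name, master binary log position. Verify that layout against your server version before relying on it. The function name is hypothetical.

```python
def change_master_from_relay_log_info(text):
    """Build a CHANGE MASTER TO statement from relay-log.info contents.

    Assumed layout (one value per line): relay log name, relay log
    position, master binary log name, master binary log position.
    """
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if len(lines) < 4:
        raise ValueError("expected at least four lines in relay-log.info")
    master_log_file = lines[2]
    master_log_pos = int(lines[3])  # raises ValueError if not numeric
    return "CHANGE MASTER TO MASTER_LOG_FILE='%s', MASTER_LOG_POS=%d;" % (
        master_log_file.replace("'", "''"),  # double any embedded quote
        master_log_pos,
    )
```

The resulting statement would be reviewed and then executed on the stopped slave before issuing START SLAVE.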

If your slave replicates LOAD DATA INFILE statements, you should also back up any SQL_LOAD-* files that exist in the directory that the slave uses for this purpose. The slave needs these files to resume replication of any interrupted LOAD DATA INFILE operations. The directory location is specified using the --slave-load-tmpdir option. If this option is not specified, the directory location is the value of the tmpdir system variable.

The replication process does not care if the source table on the master and the replicated table on the slave use different engine types. In fact, the system variables storage_engine and table_type are not replicated.

This provides a number of advantages in the replication process in that you can take advantage of different engine types for different replication scenarios. For example, in a typical scaleout scenario (see Section 15.2.3, “Using Replication for Scale-Out”), you want to use InnoDB tables on the master to take advantage of the transactional functionality, but use MyISAM on the slaves where transaction support is not required because the data is only read. When using replication in a data logging environment you may want to use the Archive storage engine on the slave.

Setting up different engines on the master and slave depends on how you set up the initial replication process:

If you used mysqldump to create the database snapshot on your master then you could edit the dump text to change the engine type used on each table.

Another alternative for mysqldump is to disable engine types that you do not want to use on the slave before using the dump to build the data on the slave. For example, you can add the --skip-innodb option on your slave to disable the InnoDB engine. If a specific engine does not exist, MySQL will use the default engine type, usually MyISAM. If you want to disable further engines in this way, you may want to consider building a special binary to be used on the slave that only supports the engines you want.

If you are using raw data files for the population of the slave, you will be unable to change the initial table format. Instead, use ALTER TABLE to change the table types after the slave has been started.

For new master/slave replication setups where there are currently no tables on the master, avoid specifying the engine type when creating new tables.
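Editing the dump text by hand becomes tedious for large dumps, so the first option above could be automated. The following is a rough sketch, assuming the dump closes each CREATE TABLE statement with a line such as `) ENGINE=InnoDB DEFAULT CHARSET=latin1;`. Only lines starting with `)` are rewritten, which is a heuristic meant to leave row data untouched. The function name is hypothetical.

```python
import re


def rewrite_dump_engine(lines, old_engine, new_engine):
    """Yield dump lines with old_engine replaced on table-closing lines."""
    pattern = re.compile(r"\bENGINE=" + re.escape(old_engine) + r"\b", re.IGNORECASE)
    for line in lines:
        # Only touch lines that close a CREATE TABLE statement, such as
        # ") ENGINE=InnoDB DEFAULT CHARSET=latin1;".
        if line.startswith(")"):
            line = pattern.sub("ENGINE=" + new_engine, line)
        yield line
```

Because the function works on any iterable of lines, it can stream from one open file to another without loading the whole dump into memory.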

If you are already running a replication solution and want to convert your existing tables to another engine type, follow these steps:

Stop the slave from running replication updates:

mysql> STOP SLAVE;

This will enable you to change engine types without interruptions.

Execute an ALTER TABLE ... Engine='enginetype' for each table where you want to change the engine type.

Start the slave replication process again:

mysql> START SLAVE;
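When many tables need converting, the statements for the steps above could be generated rather than typed. The sketch below is one possible way to do that. It assumes unqualified table names in the current default database, and the function name is hypothetical.

```python
def engine_conversion_statements(tables, engine):
    """Return the SQL statements to run on the slave, in order."""
    statements = ["STOP SLAVE;"]
    for table in tables:
        # Quote the identifier with backticks, doubling any embedded backtick.
        quoted = "`" + table.replace("`", "``") + "`"
        statements.append("ALTER TABLE %s ENGINE=%s;" % (quoted, engine))
    statements.append("START SLAVE;")
    return statements
```

The returned list can be joined with newlines and passed to the mysql client on the slave.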

Although the storage_engine and table_type variables are not replicated, be aware that CREATE TABLE and ALTER TABLE statements that include the engine specification will be correctly replicated to the slave. For example, if you have a CSV table and you execute:

mysql> ALTER TABLE csvtable Engine='MyISAM';

The above statement will be replicated to the slave and the engine type on the slave will be converted to MyISAM, even if you have previously changed the table type on the slave to an engine other than CSV. If you want to retain engine differences on the master and slave, you should be careful to use the storage_engine variable on the master when creating a new table. For example, instead of:

mysql> CREATE TABLE tablea (columna int) Engine=MyISAM;

Use this format:

mysql> SET storage_engine=MyISAM;
mysql> CREATE TABLE tablea (columna int);

When replicated, the storage_engine variable will be ignored, and the CREATE TABLE statement will be executed with the slave's default engine type.

You can use replication as a scale-out solution, i.e. where you want to split up the load of database queries across multiple database servers, within some reasonable limitations.

Because replication works from the distribution of one master to one or more slaves, using replication for scaleout works best in an environment where you have a high number of reads and low number of writes/updates. Most websites fit into this category, where users are browsing the website, reading articles, posts, or viewing products. Updates only occur during session management, or when making a purchase or adding a comment/message to a forum.

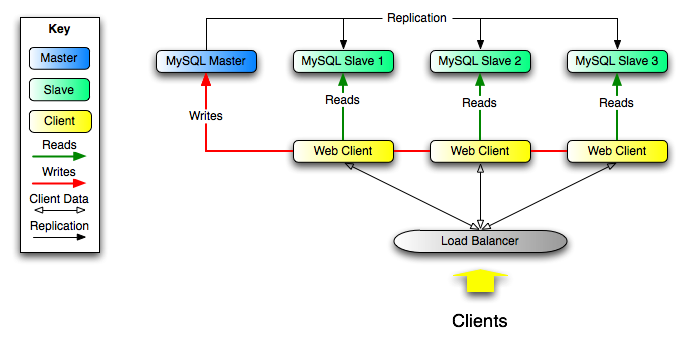

Replication in this situation enables you to distribute the reads over the replication slaves, while still allowing your web servers to communicate with the replication master when a write is required. You can see a sample replication layout for this scenario in Figure 15.1, “Using replication to improve the performance during scaleout”.

If the part of your code that is responsible for database access has been properly abstracted/modularized, converting it to run with a replicated setup should be very smooth and easy. Change the implementation of your database access to send all writes to the master, and to send reads to either the master or a slave. If your code does not have this level of abstraction, setting up a replicated system gives you the opportunity and motivation to clean it up. Start by creating a wrapper library or module that implements the following functions:

safe_writer_connect()

safe_reader_connect()

safe_reader_statement()

safe_writer_statement()

safe_ in each function name means that the function takes care of handling all error conditions. You can use different names for the functions. The important thing is to have a unified interface for connecting for reads, connecting for writes, doing a read, and doing a write.
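The four functions could take roughly the following shape. This is a minimal Python sketch rather than a recommended implementation: the class name and the `connect` callable (host name in, DB-API connection out) are hypothetical, and the error handling shown (fall back to another server, roll back a failed write) is only one possible policy.

```python
import random


class ReplicatedDatabase:
    """Route writes to the master and reads to a slave (illustrative sketch)."""

    def __init__(self, connect, master, slaves):
        # connect: hypothetical callable taking a host name and returning a
        # Python DB-API connection (for example, a wrapper around a driver).
        self._connect = connect
        self._master = master
        self._slaves = list(slaves)

    def safe_writer_connect(self):
        """Connect to the master; all writes must go there."""
        try:
            return self._connect(self._master)
        except Exception as exc:
            raise ConnectionError("master %s unreachable" % self._master) from exc

    def safe_reader_connect(self):
        """Connect to a randomly chosen slave, falling back to the master."""
        candidates = random.sample(self._slaves, len(self._slaves))
        last_error = None
        for host in candidates + [self._master]:
            try:
                return self._connect(host)
            except Exception as exc:  # this server failed, try the next one
                last_error = exc
        raise ConnectionError("no server available for reads") from last_error

    def safe_reader_statement(self, conn, sql, params=()):
        """Run a read-only statement and return all rows."""
        cursor = conn.cursor()
        try:
            cursor.execute(sql, params)
            return cursor.fetchall()
        finally:
            cursor.close()

    def safe_writer_statement(self, conn, sql, params=()):
        """Run a write, committing on success and rolling back on error."""
        cursor = conn.cursor()
        try:
            cursor.execute(sql, params)
            conn.commit()
            return cursor.rowcount
        except Exception:
            conn.rollback()
            raise
        finally:
            cursor.close()
```

Keeping the server list in one object means that adding a slave, or logging how long each statement takes, touches only this class and not the calling code.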

Then convert your client code to use the wrapper library. This may be a painful and scary process at first, but it pays off in the long run. All applications that use the approach just described are able to take advantage of a master/slave configuration, even one involving multiple slaves. The code is much easier to maintain, and adding troubleshooting options is trivial. You need modify only one or two functions; for example, to log how long each statement took, or which statement among those issued gave you an error.

If you have written a lot of code, you may want to automate the conversion task by using the replace utility that comes with standard MySQL distributions, or write your own conversion script. Ideally, your code uses consistent programming style conventions. If not, then you are probably better off rewriting it anyway, or at least going through and manually regularizing it to use a consistent style.

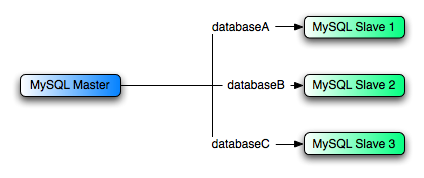

There may be situations where you have a single master and want to replicate different databases to different slaves. For example, you may want to distribute different sales data to different departments to help spread the load during data analysis. A sample of this layout is shown in Figure 15.2, “Using replication to replicate separate DBs to multiple hosts”.

You can achieve this separation by configuring the master and slaves as normal, and then limiting the binary log statements that each slave processes by using the replicate-wild-do-table configuration option on each slave.

For example, to support the separation as shown in Figure 15.2, “Using replication to replicate separate DBs to multiple hosts”, you would configure each slave as follows before enabling replication using START SLAVE:

MySQL Slave 1 should have the following configuration options:

replicate-wild-do-table=sales.%
replicate-wild-do-table=finance.%

MySQL Slave 2 should have the following configuration option:

replicate-wild-do-table=support.%

MySQL Slave 3 should have the following configuration option:

replicate-wild-do-table=service.%
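To get a feel for which tables each slave would apply under the options above, the pattern matching can be approximated in a few lines of Python. This is a simplified sketch: it treats `%` as any sequence of characters and `_` as any single character, and it ignores backslash escapes, case-sensitivity settings, and interaction with other replication filter options. The function name is hypothetical.

```python
import re


def matches_wild_do_table(qualified_name, patterns):
    """Return True if db.table matches any replicate-wild-do-table pattern.

    Simplified: % matches any sequence, _ matches one character, and
    everything else must match literally.
    """
    for pattern in patterns:
        regex = "".join(
            ".*" if ch == "%" else "." if ch == "_" else re.escape(ch)
            for ch in pattern
        )
        if re.fullmatch(regex, qualified_name, flags=re.DOTALL):
            return True
    return False
```

For example, with the patterns `sales.%` and `finance.%` configured for Slave 1, a table named `sales.orders` matches while `support.tickets` does not.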

If you have data that needs to be synchronized to the slaves before replication starts, you have a number of options:

Synchronize all the data to each slave, and delete the databases and/or tables that you do not want to keep.

Use mysqldump to create a separate dump file for each database and load the appropriate dump file on each slave.

Use a raw data file dump and include only the specific files and databases that you need for each slave. This option will not work with InnoDB databases unless you use the innodb_file_per_table option.

Each slave in this configuration will transfer the entire binary log from the master, but will only execute the events within the binary log that apply to the configured databases and tables.

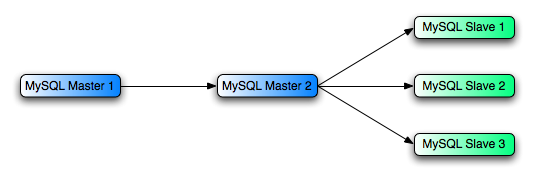

As the number of slaves connecting to a master increases, the load, although minimal, also increases, as each slave uses up a client connection to the master. Also, as each slave must receive a full copy of the master binary log, the network load on the master may also increase and start to create a bottleneck.

If you are using a large number of slaves connected to one master, and that master is also busy processing requests (for example, as part of a scaleout solution), then you may want to improve the performance of the replication process.

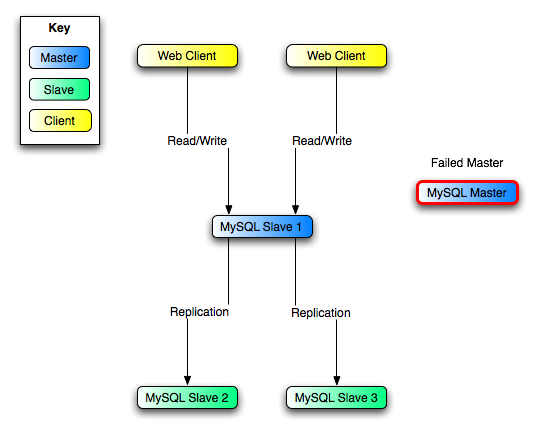

One way to improve the performance of the replication process is to create a deeper replication structure that enables the master to replicate to only one slave, and for the remaining slaves to connect to this primary slave for their individual replication requirements. A sample of this structure is shown in Figure 15.3, “Using an additional replication host to improve performance”.

For this to work, you must configure the MySQL instances as follows:

Master 1 is the primary master where all changes and updates are written to the database. Binary logging should be enabled on this machine.